Handling a million requests

Have you ever had the sudden urge to spam a server with thousands of requests?

No?

What about a million requests?

I set out to find the number of requests per second a server could handle, using various EC2 instances, techniques and protocols, all on a budget.

If you would like to follow along with this experiment, here is the GitHub repo.

We will run several experiments, each with different variables, to see which setup performs best. (These experiments were performed on AWS, but you can run them on any platform that supports Docker, including locally.)

We will be running a simple Node.js server using Express that returns a JSON response containing a simple user object.
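For readers who want a picture of such a server before cloning anything, here is a minimal sketch. It is not the repo's index.js: it uses Node's built-in http module instead of Express so that it has no dependencies, and the user fields and the names handleRequest and createServer are made up for illustration.

```javascript
const http = require('node:http');

// Hypothetical user object; the fields returned by the repo's server may differ.
const user = { id: 1, name: 'Jane Doe', email: 'jane@example.com' };

// Request handler: answers every request with the same JSON payload.
function handleRequest(req, res) {
  const body = JSON.stringify(user);
  res.writeHead(200, {
    'Content-Type': 'application/json',
    'Content-Length': Buffer.byteLength(body),
  });
  res.end(body);
}

// Build the server without binding a port, so the caller decides when to listen.
function createServer() {
  return http.createServer(handleRequest);
}
```

Calling createServer().listen(3000) would start it on port 3000 (an assumed port; use whatever your setup expects).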

Experiment 1

Just your regular Node.js Express server deployed directly onto a server: no Docker, no load balancer.

- Clone the repo above.

- Install Node.js and npm on your server

- Run npm install to install dependencies

- Start the server using

node index.js

To perform the test, you need to install k6 (a load-testing client) on another machine or on the same machine as the server. Running k6 on the same machine as the server will affect test results, because the two compete for CPU.

Once installed, you can run this command to perform the test from the same machine:

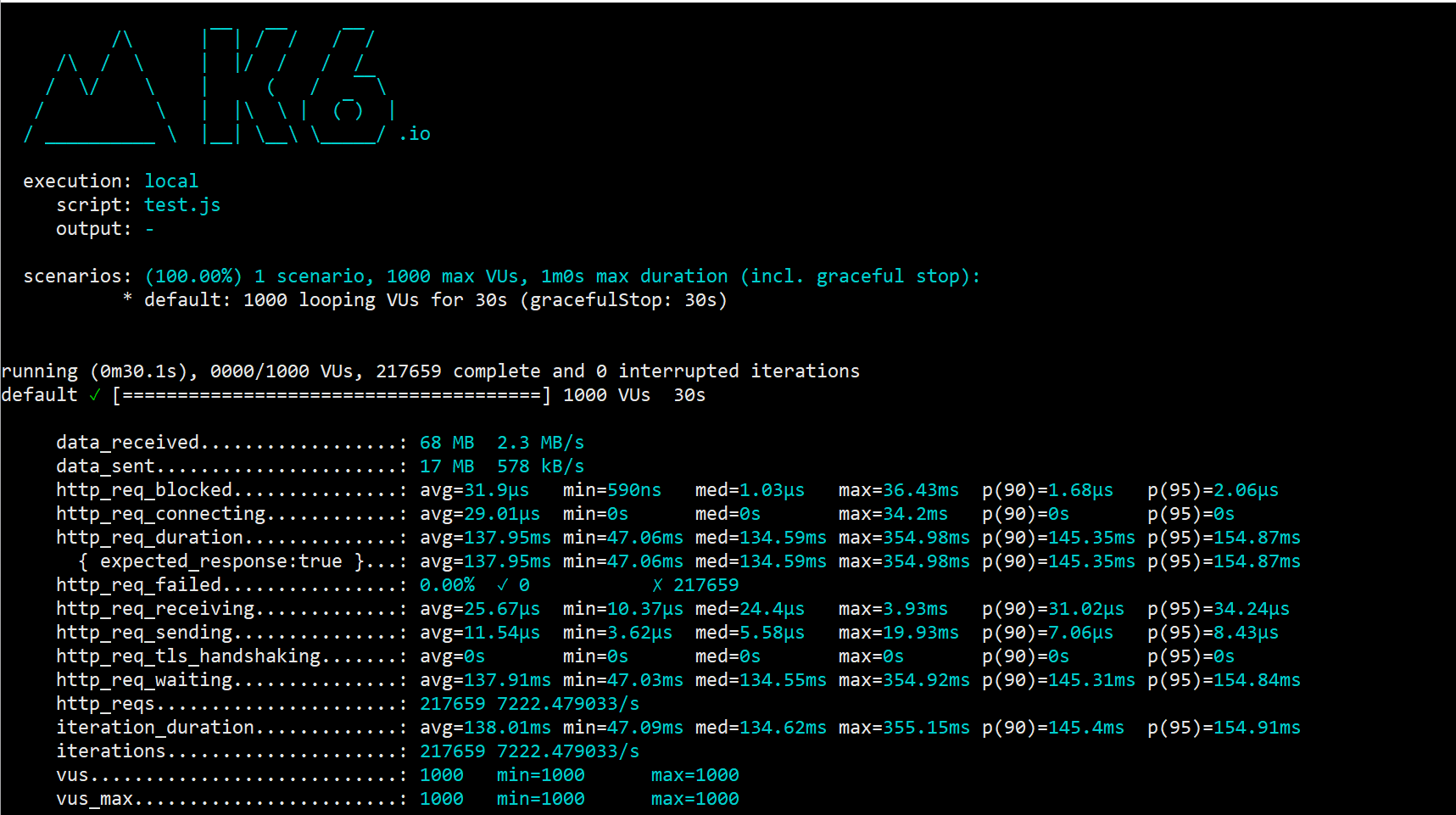

k6 run http-tests/local.js --duration=30s --vus=32

vus stands for virtual users. You can experiment with this value; depending on your hardware, you will get different values for RPS (requests per second).
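To make "virtual users" concrete, here is a rough model of the idea in plain Node.js. It is not how k6 is implemented, only a sketch of the concept: each virtual user is a loop that sends requests back-to-back until the test duration runs out. The names runLoad and sendRequest are hypothetical.

```javascript
// Run `vus` concurrent loops. Each loop calls sendRequest() back-to-back
// until durationMs has elapsed, then the totals are reported.
async function runLoad(sendRequest, { vus, durationMs }) {
  const deadline = Date.now() + durationMs;
  let completed = 0;

  // One virtual user: wait for each response before sending the next request.
  async function virtualUser() {
    while (Date.now() < deadline) {
      await sendRequest();
      completed += 1;
    }
  }

  // Start all virtual users at once and wait for every loop to finish.
  await Promise.all(Array.from({ length: vus }, virtualUser));

  return { completed, rps: completed / (durationMs / 1000) };
}
```

On Node 18 or later, sendRequest could be something like () => fetch('http://localhost:3000/'), where the URL is an assumption about where your server listens. More virtual users means more requests in flight at once, which is why RPS changes as you vary the value.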

When increasing the number of virtual users, you may have to raise the limit on open file descriptors by running ulimit -n 250000. This raises the limit to 250,000, which you probably won't reach.

To perform the test from another machine, install k6 and clone the repo, then change the REMOTE_HTTP_HOST variable in the config.js file to the address where your server is hosted.

k6 run http-tests/remote.js --duration=30s --vus=32

From the results, you can see you get about 7K requests per second, about 420K requests per minute. This was performed on a c5.4xlarge.

Not bad, but we can do better.

Experiment 2

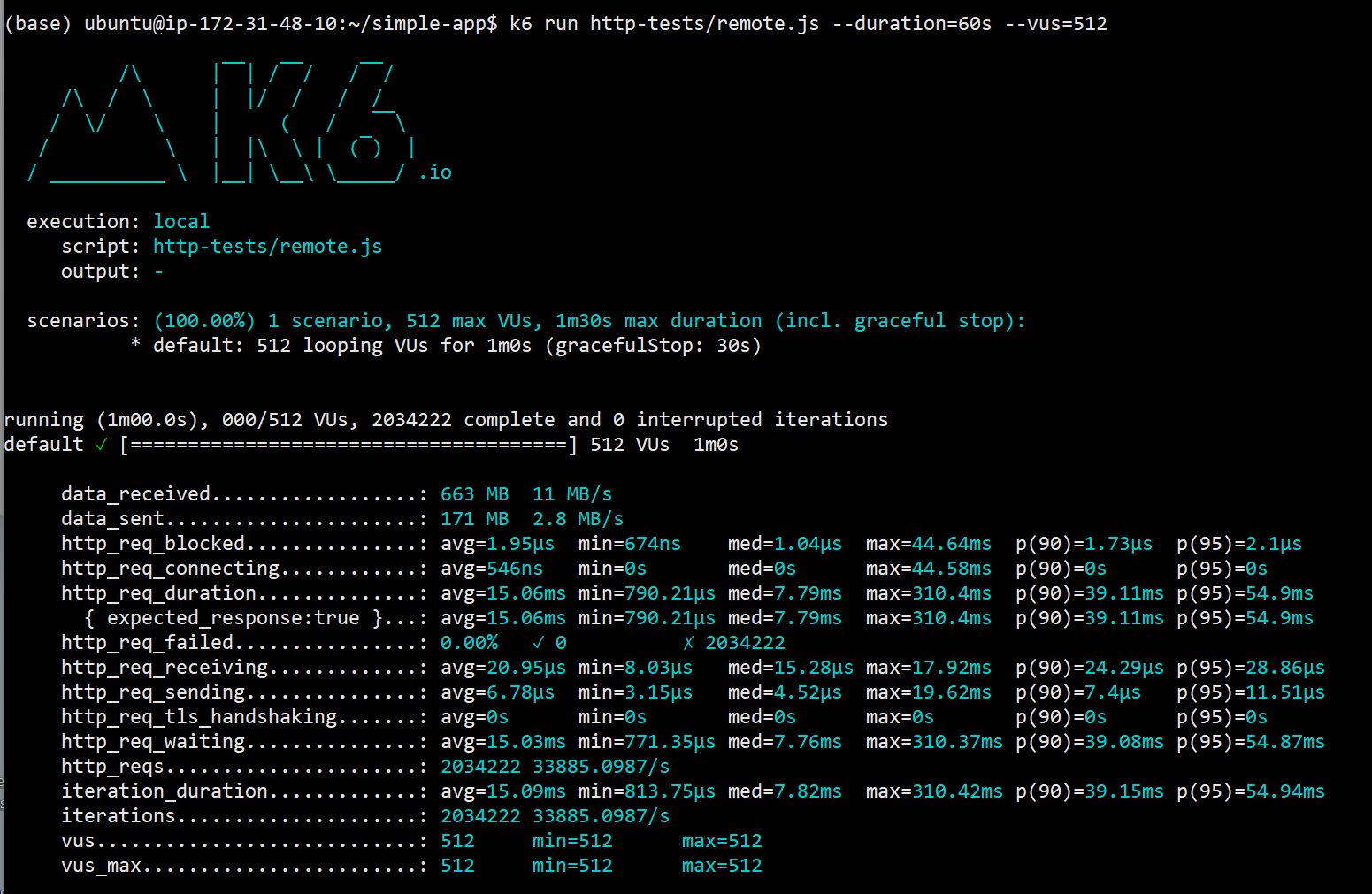

In the above experiment, you will discover that resource utilisation is fairly low, meaning that we are not taking advantage of every CPU cycle available to us.

Here we are going to use Docker to spin up 8 containers. (I found that 8 containers work best on a c5.4xlarge.)

- Clone the repo above.

- Install Docker and docker-compose on your machine

- Build the docker images using

bash build.sh

- Start the server using

bash starthttp.sh

Run the same commands as in Experiment 1. Remember to change REMOTE_HTTP_HOST in config.js.

This was performed on a c5.4xlarge.

From the results, you can see you get about 34K requests per second, or about 2M requests per minute. That's right: we were able to handle more than a million requests per minute, roughly 2 MILLION, on a fairly low budget (about 50 cents an hour).
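The arithmetic behind these figures is easy to check. The sketch below only uses the numbers quoted in this post (7K and 34K requests per second, about 50 cents an hour); the function names are made up for illustration, and the cost figure assumes the server sustains that rate for a full hour.

```javascript
// Figures quoted in the two experiments.
const experiment1Rps = 7000;  // Experiment 1: single Node.js process
const experiment2Rps = 34000; // Experiment 2: 8 containers
const hourlyCostUsd = 0.5;    // "about 50 cents an hour"

// Convert a per-second rate into a per-minute rate.
function requestsPerMinute(rps) {
  return rps * 60;
}

// Cost in USD of serving one million requests at a sustained rate.
function costPerMillionRequests(rps, hourlyCost) {
  const requestsPerHour = rps * 3600;
  return hourlyCost / (requestsPerHour / 1e6);
}

console.log(requestsPerMinute(experiment1Rps)); // 420000
console.log(requestsPerMinute(experiment2Rps)); // 2040000
console.log((experiment2Rps / experiment1Rps).toFixed(2)); // 4.86
console.log(costPerMillionRequests(experiment2Rps, hourlyCostUsd).toFixed(4)); // 0.0041
```

In other words, at a sustained 34K requests per second, a million requests cost well under a cent.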

That is almost 5 times as much as we got without load balancing and Docker. This time we were able to fully utilise all CPUs, as HAProxy spreads the load across the containers.
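To illustrate what spreading the load means, here is a small simulation. It assumes round-robin balancing, which may or may not match the HAProxy configuration in the repo, and the backend addresses and the name createRoundRobin are hypothetical.

```javascript
// Returns a function that hands out backends in strict rotation.
function createRoundRobin(backends) {
  if (backends.length === 0) {
    throw new Error('at least one backend is required');
  }
  let next = 0;
  return function pick() {
    const backend = backends[next];
    next = (next + 1) % backends.length;
    return backend;
  };
}

// Eight hypothetical container addresses, mirroring the 8 containers above.
const backends = Array.from({ length: 8 }, (_, i) => `app${i + 1}:3000`);
const pick = createRoundRobin(backends);

// Simulate one second of traffic at 34,000 requests and count per backend.
const counts = new Map(backends.map((b) => [b, 0]));
for (let i = 0; i < 34000; i += 1) {
  const backend = pick();
  counts.set(backend, counts.get(backend) + 1);
}

console.log(Object.fromEntries(counts)); // each backend receives 4250 requests
```

Each container only has to handle an eighth of the traffic, and since a single Node.js process runs your JavaScript on one thread, running several processes is what lets the machine put more of its cores to work.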

If you are using AWS for this experiment, you can set up two c5.4xlarge instances, one as the server and the other as the k6 client, in the same VPC to avoid latency and egress charges. Also, don't forget to terminate the instances when you are done.

Next up, we're going to see how to lower our bandwidth by reducing the size of our payload using Protocol Buffers. And maybe in future, with a slightly higher budget, we can hit 1M RPS or more.